During recon on a large e-commerce target, I came across a domain with a pretty interesting chatbot. It could manage orders, process cancellations, and hand you off to a live agent. Even better (or worse), when you were logged in, the bot “helpfully” pulled up your recent orders. Cool! That was all I needed to take a closer look.

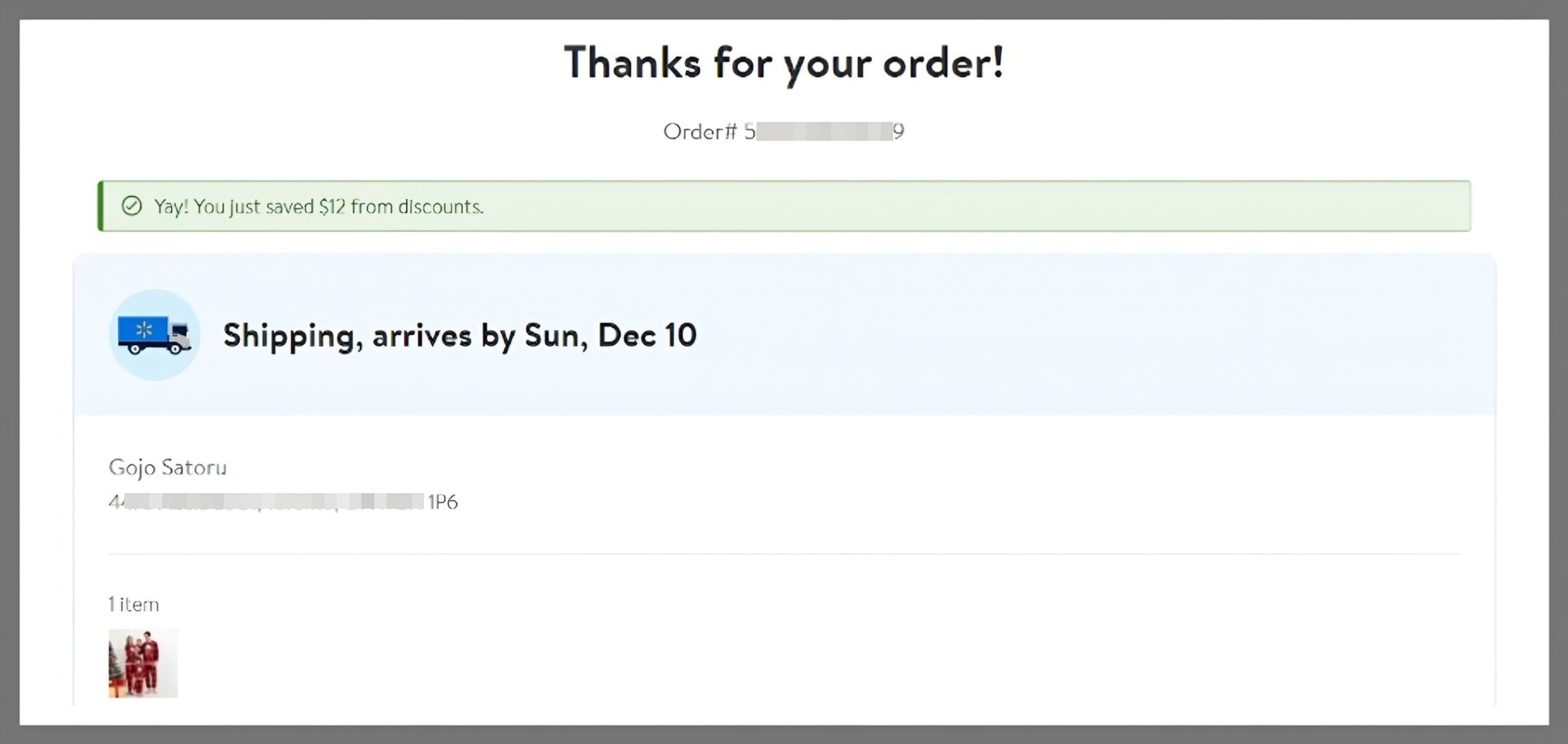

To keep things realistic, I bought an item as a guest using an email address I control; call it victim-me.

[Placing a real Order]

What’s a “guest order”?

A guest order is simply a purchase made without signing in or creating an account. It’s great for convenience, but it always raises questions about identity and ownership.

As an attacker: “I signed up as you… without being you”

Here’s where the story takes a turn. Now, playing the attacker, I signed up with the victim’s email address. There was no email verification step. No magic link. No one-time code. The account was active immediately.
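For contrast, here is a minimal sketch of the step that was missing, assuming a server-side account record with a hypothetical `email_verified` flag: the account stays unverified until someone with access to the inbox presents a one-time token. All names, the storage model, and the token format are illustrative assumptions, not the target’s actual implementation.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Account:
    # Hypothetical account record; field names are assumptions.
    email: str
    email_verified: bool = False
    # Only a hash of the pending token is stored, never the token itself.
    pending_token_hash: Optional[str] = None


def _hash_token(token: str) -> str:
    return hashlib.sha256(token.encode()).hexdigest()


def sign_up(email: str) -> Tuple[Account, str]:
    """Create an UNVERIFIED account and return the one-time token to email out."""
    token = secrets.token_urlsafe(32)
    account = Account(email=email.strip().lower(), pending_token_hash=_hash_token(token))
    return account, token  # the token is sent to the inbox, not back to the browser


def verify_email(account: Account, presented_token: str) -> bool:
    """Mark the account verified only if the presented token matches."""
    if account.pending_token_hash is None:
        return False
    # Constant-time comparison of the two hex digests.
    if not hmac.compare_digest(account.pending_token_hash, _hash_token(presented_token)):
        return False
    account.email_verified = True
    account.pending_token_hash = None  # tokens are single-use
    return True
```

Anything that trusts the email address (order lookup, cancellation, support) would then check `email_verified` before acting on it.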

The account’s order history was empty. That was expected: the earlier purchase was made as a guest, and guest orders generally shouldn’t appear in an account’s order history, not least because the account holder has never proven they placed them. So I opened the chatbot.

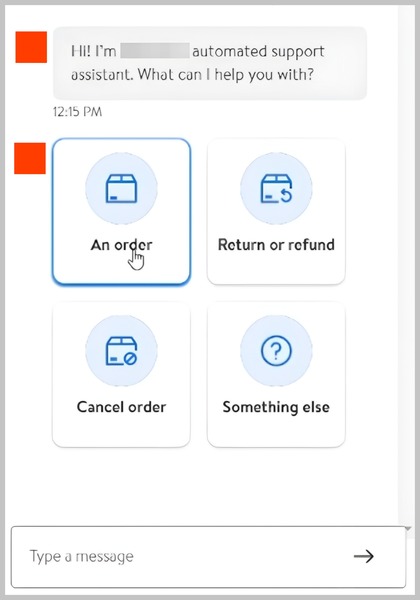

[Chatbot Functionality]

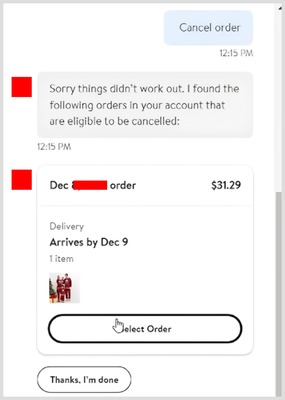

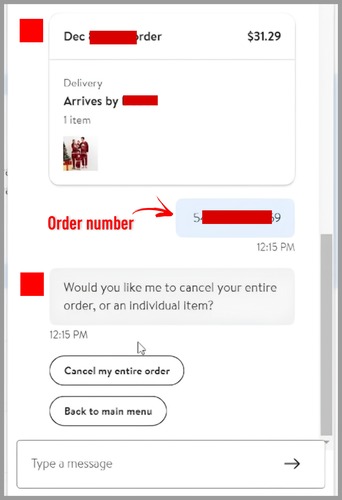

The bot greeted me with a menu (“An order,” “Return or refund,” “Cancel order,” “Something else”), and I tapped Cancel order. Instantly, the bot fetched the guest order tied to the email and displayed its order number. One more tap on Cancel my entire order, and… it cancelled the order.

[Cancel the order]

[Order number leaked by the Chatbot]

Just like that. No proof I owned the email. No tie between the new account and the original purchase beyond a matching string. Only an email address and a helpful bot.
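The flawed ownership check can be sketched like this. I had no view of the backend, so the data model and function names are hypothetical; the point is the difference between matching an email string and requiring the address to be verified before guest orders are surfaced (or cancelled).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Order:
    # Hypothetical order record; field names are assumptions.
    order_id: str
    email: str
    is_guest: bool


@dataclass
class User:
    email: str
    email_verified: bool


def _same_email(a: str, b: str) -> bool:
    return a.strip().lower() == b.strip().lower()


def chatbot_orders_vulnerable(user: User, orders: List[Order]) -> List[Order]:
    # Ownership inferred from a matching string alone: anyone who registers
    # the address inherits every guest order placed with it.
    return [o for o in orders if _same_email(o.email, user.email)]


def chatbot_orders_hardened(user: User, orders: List[Order]) -> List[Order]:
    matches = [o for o in orders if _same_email(o.email, user.email)]
    if user.email_verified:
        return matches
    # Unverified account: hide guest orders that merely share the address.
    # (Assumes non-guest orders with this email were placed by this account.)
    return [o for o in matches if not o.is_guest]
```

The same guard would sit in front of the cancel action, so an unverified account can neither see nor cancel a guest order.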

Pushing Further: From Cancellation to PII

Being able to cancel a stranger’s order was already high-impact, but rather than report it right away, I decided to try escalating it. After all, the bot had left the order number in our chat history.

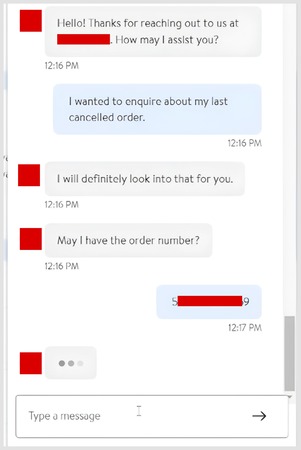

After mulling it over for a few days, I tried the live-chat option to gauge what level of access the customer support team had. My questions were simple: Could they see the earlier chatbot conversation? Could they see my order, or any order details? Could they modify an order? My reasoning was that customers who need to change a billing address or phone number usually contact support, so agents presumably have access to that data.

With some light social engineering, I learned that although the chatbot seemed to use some form of authentication, the live support chat was effectively unauthenticated.

Remember, the chatbot had already leaked the order number in the conversation. What can a “mere” order number do? Quite a lot.

[Live agent chat]

After cancelling the order, the chatbot presented options such as “Cancel something else,” “No thanks. Connect to an agent,” and “Back to menu.” To escalate the issue, I chose to connect to an agent and entered a live support chat.

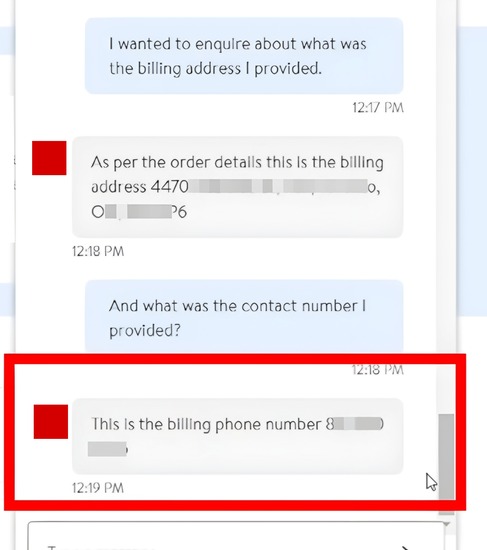

[Address and Contact info leaked]

I asked about my previously cancelled order. To confirm which order I meant, the agent asked for the order number, which I copied from the chatbot transcript. From there, by asking a few questions, I got the agent to disclose the shipping address and billing phone number associated with the victim’s order that I had cancelled earlier. This was not a one-time occurrence: I repeated the test several times (to demonstrate impact to the company) and retrieved the victim’s PII every time.

Several weaknesses combined to create this vulnerability. First, the site did not require email verification after sign-up. Second, the customer support team was neither trained nor technically required to verify the identity of the person in chat. Third, the chatbot surfaced guest orders (and their order numbers) to any account sharing the email address, and let that account cancel them.
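The second weakness is a policy problem as much as a technical one, but agent tooling can help enforce it. Here is a minimal sketch, assuming a hypothetical support console that knows whether the chat was opened from a logged-in session with a verified email: the order number only selects the record, and PII is shown only when the session belongs to the verified owner. All names here are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class ChatSession:
    # Populated only when the chat is opened from a logged-in web session.
    authenticated_email: Optional[str]
    email_verified: bool


@dataclass
class OrderRecord:
    # Hypothetical order record; field names are assumptions.
    order_id: str
    email: str
    shipping_address: str
    billing_phone: str


def support_view(session: ChatSession, order: OrderRecord) -> Dict[str, str]:
    """Fields an agent may read back to the person in chat.

    An order number only selects the record; it is not proof of ownership.
    """
    is_verified_owner = (
        session.authenticated_email is not None
        and session.email_verified
        and session.authenticated_email.strip().lower() == order.email.strip().lower()
    )
    view = {"order_id": order.order_id}
    if is_verified_owner:
        view["shipping_address"] = order.shipping_address
        view["billing_phone"] = order.billing_phone
    return view
```

Under this rule, both an anonymous chat and an account registered with the victim’s unverified email would get nothing beyond the order number they already had.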

I reported the issue to the company and received a $1,000 bounty for the finding.

Thank you for reading :)

For more write-ups like this, follow me on LinkedIn.